pylon AI Agent#

Deep learning (DL) models are packaged in bundles together with additional information, such as hardware configurations, and released via the pylon AI Platform. Once a bundle has been released, use the pylon AI Agent to deploy its models on the Triton Inference Server, where the pylon AI vTools can use them.

The Triton Inference Server handles the inference process. In the context of deep learning, inference means that a trained model is applied to new, unseen data and makes predictions about the content.

Overview of the pylon AI Agent#

There are two ways to start the AI Agent:

- From the Workbench menu of the pylon Viewer

- From a vTool's settings dialog

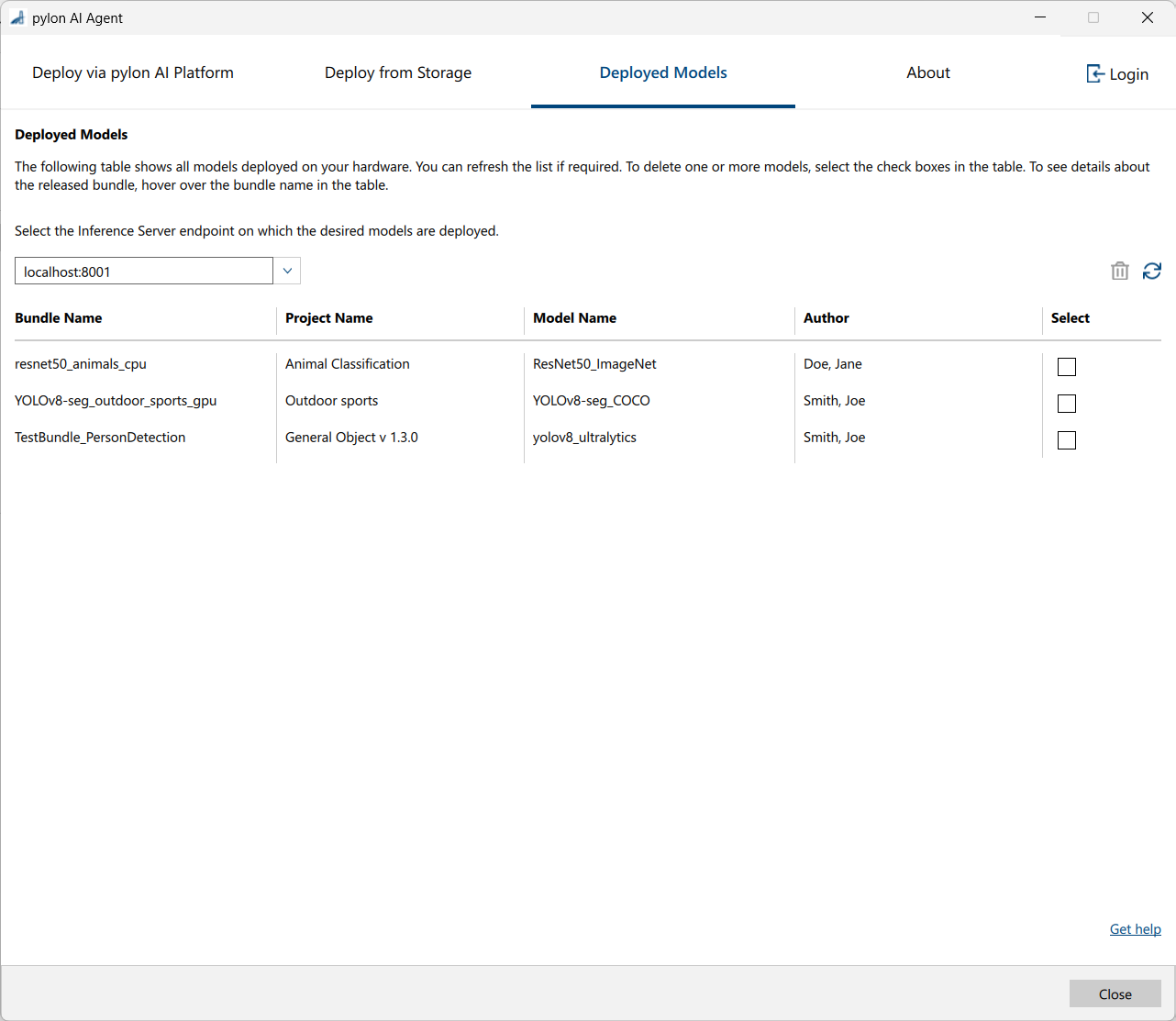

Both methods open the AI Agent on the Deployed Models tab. Here, you can check which models have already been deployed.

To deploy models, you can choose between deployment via the pylon AI Platform or from storage, e.g., your hard drive or a flash drive.

Deploying via the pylon AI Platform#

If you want to deploy models via the pylon AI Platform, you have to be aware of the following:

-

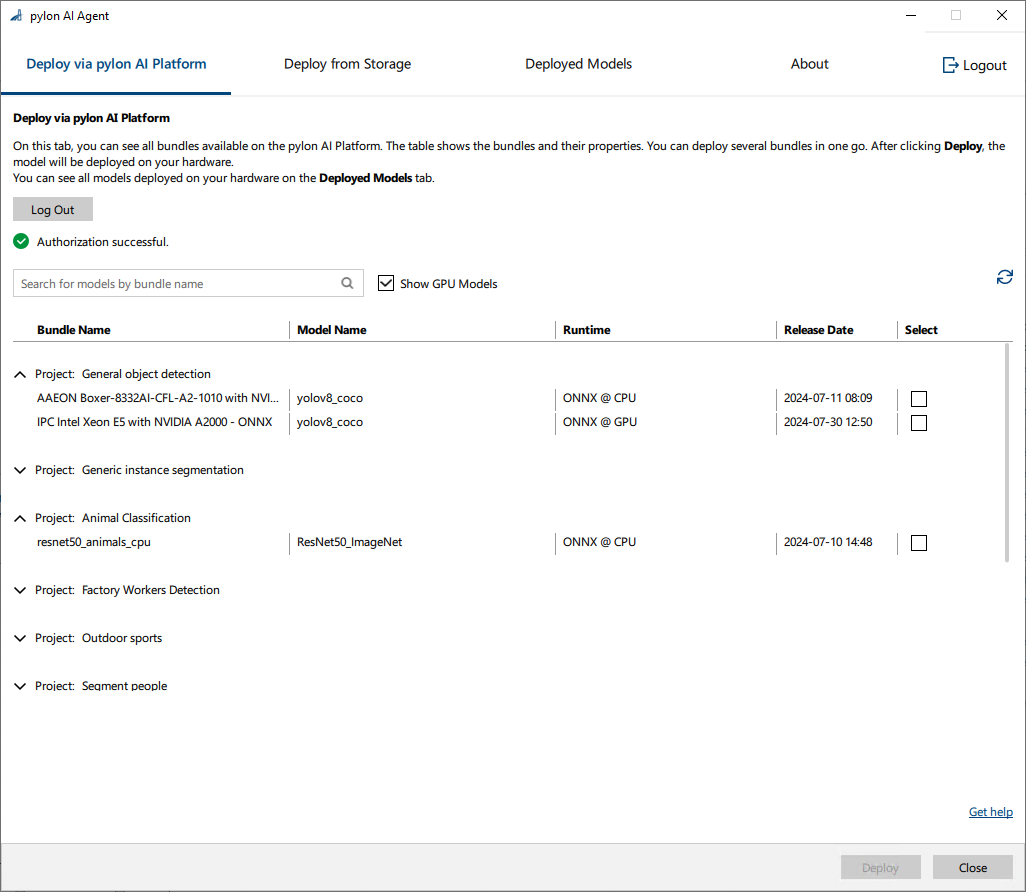

If you have opened the AI Agent from the Workbench menu, you see all available models for all vision tasks on the Deploy via pylon AI Platform tab.

-

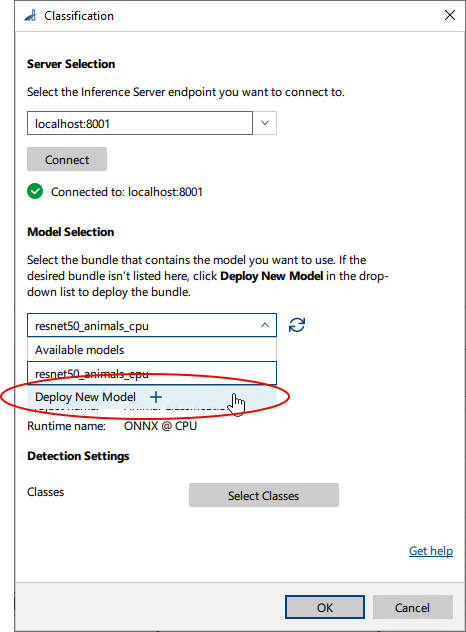

If you have opened the AI Agent from the settings dialog of a vTool, you only see models that are suitable for the vTool you are currently configuring. For the following screenshot the AI Agent has been opened from the settings dialog of the Classification vTool.

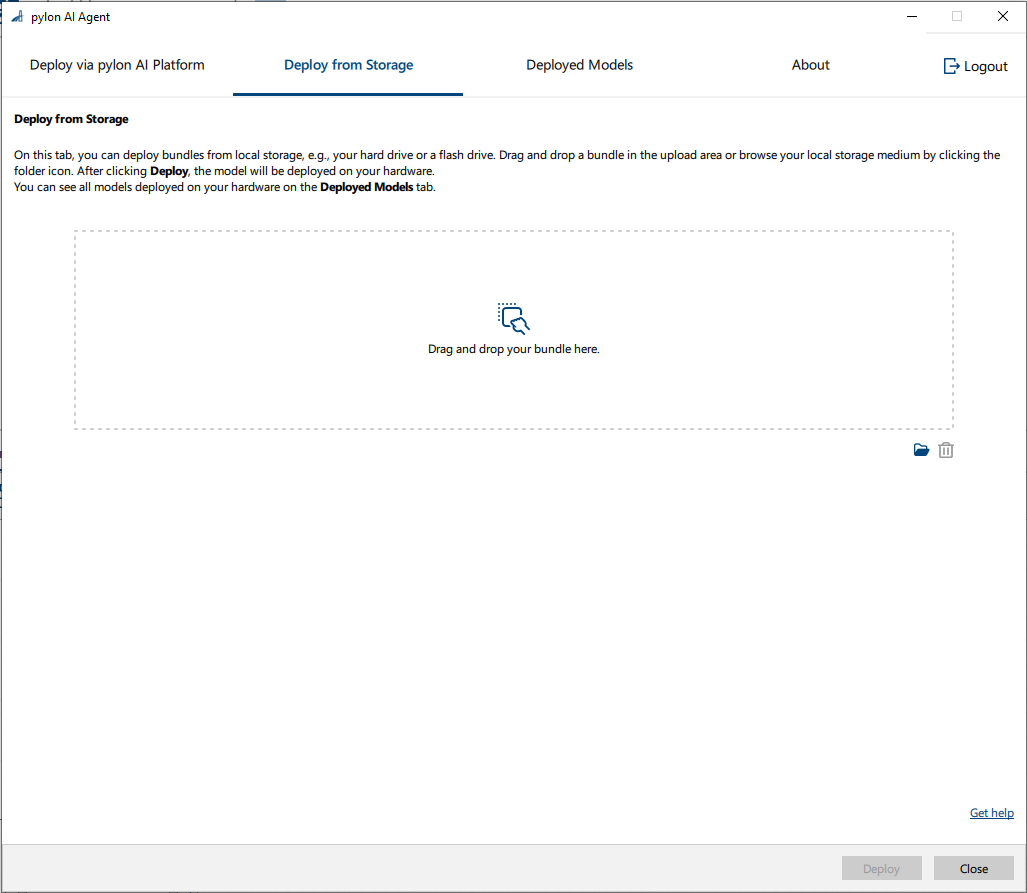

Deploying from Storage#

If you want to deploy models from storage, first download the bundles that contain the desired models from the pylon AI Platform to a storage medium of your choice.

Deploying Models#

- Start the Triton Inference Server.

  For instructions, see the Install document included in the pylon Supplementary Package for pylon AI.

- Start the pylon AI Agent by one of the following methods:

  - From the Workbench menu of the pylon Viewer

  - From a vTool's settings dialog

  The pylon AI Agent opens on the Deployed Models tab. Here, you can check which models have already been deployed.

- Decide whether you want to deploy new models from storage or via the pylon AI Platform.

Deploy from Storage

- Download the desired bundle from the pylon AI Platform if you haven't done so already.

- Go to the Deploy from Storage tab.

- Either drag and drop the bundle onto the drop area or click the folder icon to browse to the desired bundle file.

  This can be a local or remote storage location.

- Click Deploy.

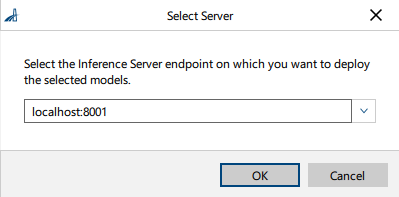

- In the Select Server dialog, select the Triton Inference Server on which you want to deploy the models.

- Click OK.

The deployment starts immediately. A message appears when the deployment has completed.

Deploy via pylon AI Platform

- Go to the Deploy via pylon AI Platform tab.

- Log in to the pylon AI Platform by clicking the Log in button.

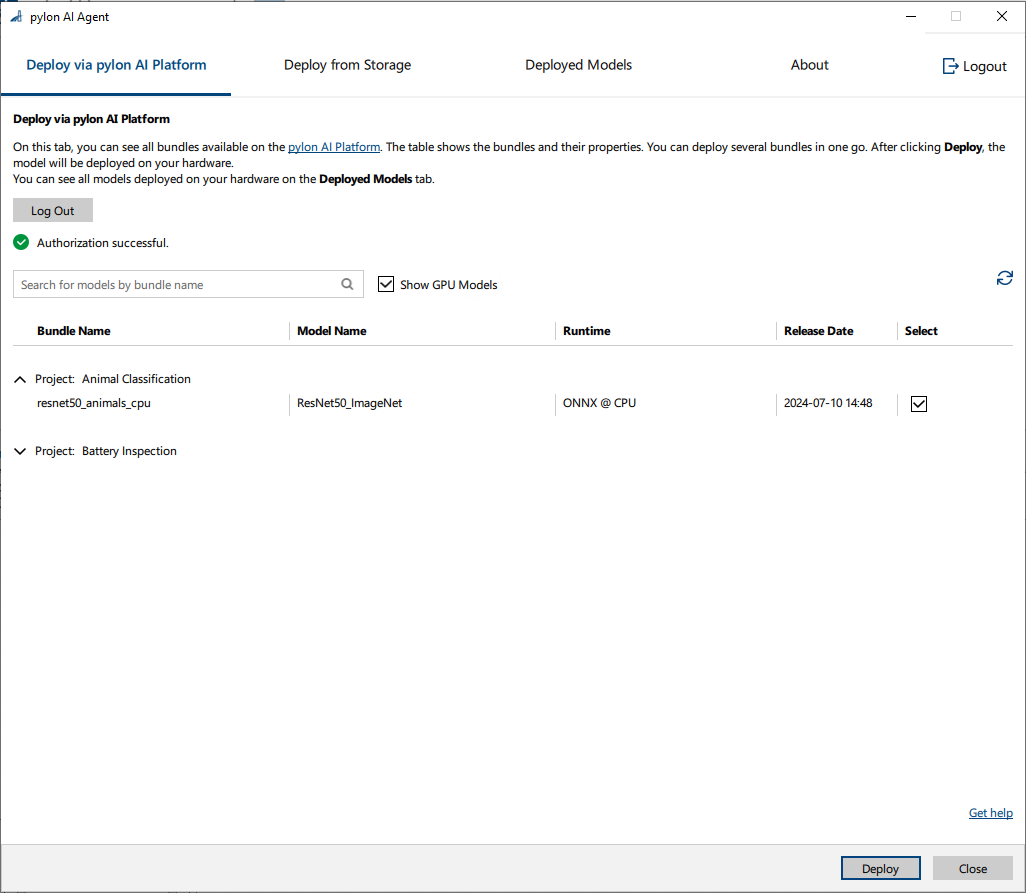

  You're taken to the AI Platform where you can sign in. Afterwards, the web page should close and you should be returned automatically to the AI Agent. If that doesn't happen, return to the AI Agent yourself to continue.

- Select one or more of the bundles listed in the table.

- Click Deploy.

- In the Select Server dialog, select the Triton Inference Server on which you want to deploy the models.

- Click OK.

The deployment starts immediately. A message appears when the deployment has completed.
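The procedure begins with a running Triton Inference Server. If a deployment fails, it can help to confirm that the server is reachable at all. The following is a minimal sketch, not part of pylon: it assumes that the server's HTTP endpoint is enabled and reachable, and it relies on the readiness endpoint `/v2/health/ready` that Triton exposes as part of its HTTP/REST protocol. The function names `ready_url` and `is_server_ready` and the `opener` parameter are hypothetical helpers introduced here for illustration.

```python
import urllib.error
import urllib.request


def ready_url(base_url: str) -> str:
    """Build the URL of Triton's readiness endpoint from a server base URL."""
    return base_url.rstrip("/") + "/v2/health/ready"


def is_server_ready(base_url: str, opener=urllib.request.urlopen,
                    timeout: float = 2.0) -> bool:
    """Return True if the server answers the readiness probe with HTTP 200.

    `opener` is injectable so that the function can be tested without a
    network connection (an assumption of this sketch, not a pylon feature).
    """
    try:
        with opener(ready_url(base_url), timeout=timeout) as response:
            return response.status == 200
    except (urllib.error.URLError, OSError):
        # Connection refused, timeout, DNS failure, or an HTTP error status.
        return False


# Example call, assuming Triton's default HTTP port 8000 on the local machine:
# is_server_ready("http://localhost:8000")
```

If the function returns `False`, check that the server has been started and that the host name and port match your installation before retrying the deployment in the AI Agent.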


General Considerations#

- Model naming: You can't deploy multiple models with the same name on the Triton Inference Server. Each model must have a unique name within the Triton Inference Server's model repository. Unique names are required because the Triton Inference Server uses the model name as an identifier to manage and route inference requests to the appropriate model. If there were two models with the same name, the server wouldn't be able to distinguish between them.

- Network latency and bandwidth: Ensure sufficient bandwidth is available. Large models can be time-consuming to download, especially over a network with limited bandwidth.

- Sufficient local storage: The Triton Inference Server needs sufficient local storage to cache or temporarily store the models downloaded. If local storage is limited, you may run into issues when deploying large or multiple models at the same time.

- Running server and vTools remotely: If you're running the Triton Inference Server on a remote computer and are also running the AI vTools from another location, be aware of the following important restrictions and considerations:

  - The time it takes for data to travel between the vTools making the inference request and the Triton Inference Server can impact the overall response time.

  - High latency can slow down the inference process, especially for real-time applications.

  - Limited network bandwidth can lead to slow or unreliable communication, particularly when transferring large amounts of data.
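Two of the considerations above, unique model names and transfer time, can be checked with simple set logic and arithmetic before you deploy. The following is a minimal sketch, not part of pylon: the function names, model names, and sizes are hypothetical, and it assumes that model names are compared as exact strings. The time estimate ignores protocol overhead and latency, so treat it as a lower bound.

```python
def find_name_conflicts(new_names, deployed_names):
    """Return, sorted, the new names that are already deployed or repeated."""
    seen = set(deployed_names)
    conflicts = set()
    for name in new_names:
        if name in seen:
            conflicts.add(name)
        seen.add(name)
    return sorted(conflicts)


def estimate_transfer_seconds(size_megabytes: float,
                              bandwidth_megabits_per_s: float) -> float:
    """Estimate the transfer time for a payload over a network link.

    Size is given in megabytes, link speed in megabits per second,
    hence the factor of 8 bits per byte.
    """
    if bandwidth_megabits_per_s <= 0:
        raise ValueError("bandwidth must be positive")
    return size_megabytes * 8 / bandwidth_megabits_per_s


# Hypothetical model names: one of the two new models clashes with a deployed one.
conflicts = find_name_conflicts(
    ["classifier_a", "detector_b"], ["classifier_a", "segmenter_c"]
)
print(conflicts)

# Hypothetical bundle of 500 MB over a 100 Mbit/s link.
print(estimate_transfer_seconds(500, 100))
```

With these example values, a 500 MB bundle needs at least 500 × 8 / 100 = 40 seconds over a 100 Mbit/s link. The same arithmetic applies to remote inference: a 5 MB image sent over that link adds at least 0.4 seconds per request before the model processes it.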